AI-Driven Threats Targeting U.S. Organizations

Artificial intelligence has become a force multiplier across the cybersecurity landscape. U.S. organizations are investing heavily in AI to improve detection, automate response, and analyze large volumes of telemetry in real time. At the same time, threat actors are integrating the same technologies into their operations to increase speed, scale, and precision.

In the United States, where critical infrastructure, financial systems, defense contractors, healthcare providers, and technology firms operate at massive scale, this dual-use dynamic creates a uniquely high-risk environment. AI lowers technical barriers, allowing attackers to automate reconnaissance, refine targeting, and generate convincing content with minimal effort. The result is not only more attacks, but more adaptive ones.

Recent findings reinforce this shift from theory to practice. In OpenAI’s June 2025 report on disrupting malicious uses of AI, researchers identified and banned accounts linked to multiple influence operations and cyber campaigns that relied on AI-generated social media content, automated translation, malware development support, and large-scale impersonation efforts. In one influence campaign, threat actors generated at least 220 coordinated comments to simulate organic engagement around geopolitical narratives, while other operations used AI to bulk-produce resumes for deceptive employment schemes or assist in debugging malware components.

A fake tweet generated with AI by a threat actor (Source: OpenAI’s June 2025 report)

These cases show that AI is no longer just assisting attackers at the margins. It is being embedded directly into social engineering, influence operations, credential abuse, and even malware development workflows. For U.S. organizations, the risk is not simply increased volume. It is increased precision, automation, and adaptability. Security teams must now defend against adversaries who can generate persuasive content at scale, refine code iteratively, and coordinate cross-platform campaigns with unprecedented efficiency.

The Rise of AI-Enabled Adversaries

Threat actors are no longer relying solely on manual reconnaissance or handcrafted exploits. The Microsoft Digital Defense Report 2025 highlights that adversaries are applying generative AI to scale social engineering, support vulnerability discovery, and adapt malicious code to evade detection controls in real time. AI shortens preparation cycles and allows campaigns to move from planning to execution much faster than before.

Exploitation remains heavily tied to common entry points, but AI increases the efficiency of those tactics. Recent incident response data shows that 28% of breaches began with phishing or social engineering, while 18% stemmed from unpatched web-facing assets and 12% involved exposed remote services. AI-assisted scanning and content generation help adversaries target these weak points at scale, prioritizing high-probability entry paths across large enterprise environments.

The report also observed a significant rise in destructive campaigns targeting cloud environments, underscoring how AI-enabled reconnaissance and automation intersect with hybrid infrastructure risk. This shift is not limited to highly sophisticated groups. Widely accessible AI tools allow smaller criminal operations to generate convincing phishing lures, refine scripts, and accelerate exploitation workflows. As automation becomes embedded in attacker playbooks, U.S. organizations face campaigns that are not necessarily novel, but faster, more persistent, and increasingly adaptive.

Why Are U.S. Organizations Prime Targets for AI-Driven Cyberattacks?

Economic and Geopolitical Positioning

The United States remains one of the most economically influential countries in the world. Its financial institutions, multinational corporations, and government agencies hold vast volumes of sensitive data and intellectual property. This concentration of value makes U.S. entities attractive targets for financially motivated cybercriminals and state-aligned threat groups alike.

Geopolitical tensions also play a role. Nation-state actors often focus on U.S. organizations to gather intelligence, disrupt strategic sectors, or influence public and private decision-making processes. AI enables these actors to conduct long-term espionage and influence operations with greater stealth and efficiency.

Critical Infrastructure Exposure

U.S. critical infrastructure is no longer protected by physical perimeters alone. Energy grids, telecommunications, transportation, healthcare, and other essential services increasingly run on hybrid environments that blend on-premises systems, public cloud platforms, and third-party services. The Cloud of War report warns that this shift has expanded the number of entry points and increased the blast radius of a single compromise across cloud-connected supply chains.

The report also ties this exposure to measurable growth in AI-enabled activity. It states that AI-assisted cyberattacks have risen by nearly 2,200% since 2022, and that penetrations of cloud networks have increased by over 130% since critical infrastructure operators leaned more heavily on interconnected cloud and cellular networks.

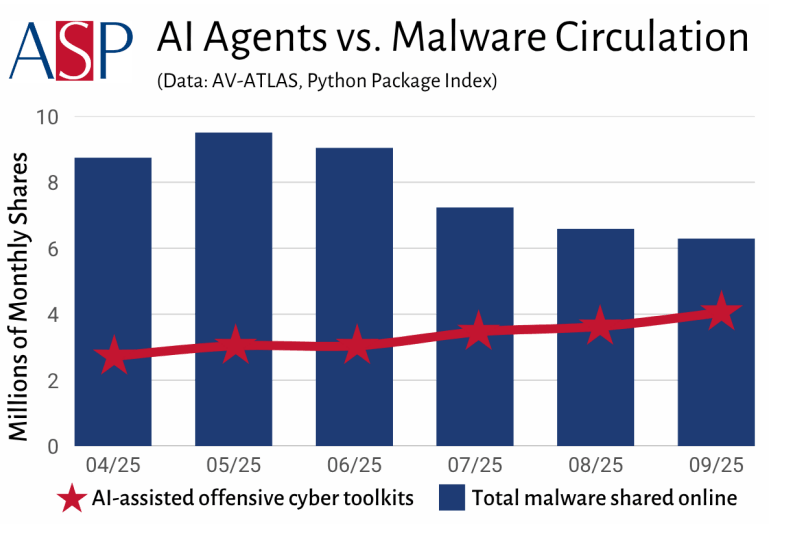

In parallel, the report notes a surge in offensive tooling adoption, citing more than 21.4 million downloads of AI-assisted offensive cyber software from March to September 2025.

AI-assisted offensive cyber toolkit downloads continue to rise in 2025, while traditional malware circulation declines, signaling a shift toward automated and agent-driven attack models. (Source: The Cloud of War report)

What makes this especially risky for infrastructure operators is the report’s emphasis on agentic capabilities. It describes AI cyber agents that can autonomously conduct reconnaissance, adapt to new environments, and in some cases modify settings without constant oversight.

In real-world terms, attackers can identify exposed perimeter systems, exploit weaknesses faster, and move laterally through implicitly trusted connections across hybrid networks. As cloud sprawl grows and legacy operational technology remains difficult to modernize, U.S. infrastructure becomes increasingly exposed to faster, more scalable campaigns that are difficult to contain once they start.

Defense and Technology Leadership

The United States leads in advanced technology research, defense innovation, and emerging industries such as artificial intelligence, aerospace, and semiconductor manufacturing. This leadership position makes U.S. organizations high-value targets for intellectual property theft and strategic espionage.

AI-driven attacks enable adversaries to sift through massive volumes of stolen data more efficiently, identify high-value research assets, and extract actionable intelligence faster than manual analysis would allow. For companies operating at the forefront of innovation, the threat is not only operational disruption but also long-term competitive and national security impact.

How Are Threat Actors Using Artificial Intelligence in Cyber Operations?

Artificial intelligence is no longer theoretical in cybercrime operations. Threat actors are actively embedding AI into reconnaissance, exploitation, and post-compromise workflows. The Microsoft Digital Defense Report 2025 notes that adversaries are already using generative AI to scale social engineering, assist vulnerability discovery, automate lateral movement, and adapt payloads in real time to evade detection.

Instead of replacing traditional tactics, AI enhances them by increasing speed and reducing operational friction. It allows attackers to test defenses, refine delivery methods, and compress timelines between exposure and exploitation.

Are AI-Generated Phishing and Deepfake Scams Increasing?

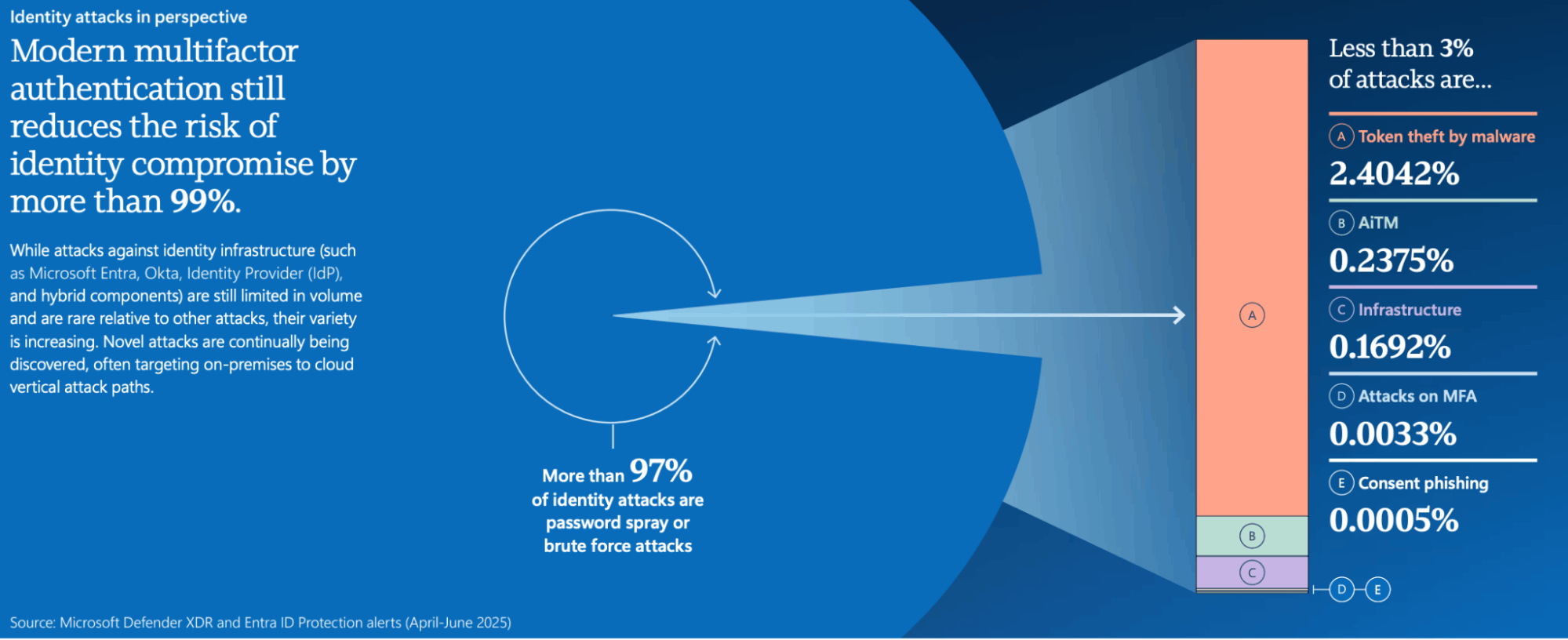

Yes, and the data supports it. Identity-based attacks rose by 32% in the first half of 2025, reflecting a growing focus on credential access and impersonation. The Microsoft Digital Defense Report 2025 also highlights the rise of deepfakes and synthetic identities, showing how AI is fueling identity fraud at scale.

While MFA blocks over 99% of identity compromise attempts, attackers are increasingly shifting toward AI-driven phishing and impersonation tactics. (Source: Microsoft Digital Defense Report 2025)

AI-generated phishing and impersonation now commonly include:

- Spearphishing emails tailored to internal tone and recent company activity

- Voice cloning to impersonate executives in urgent payment requests

- Video manipulation to support business email compromise scenarios

- Context-aware language that avoids the typical red flags of traditional phishing

Unlike mass spam campaigns, these operations are targeted and adaptable, increasing success rates against U.S. enterprises.

How Is AI Accelerating Vulnerability Discovery and Exploitation?

AI is increasingly supporting automated scanning and exploit prioritization. The Microsoft Digital Defense Report 2025 confirms that adversaries are incorporating AI into vulnerability discovery workflows to identify weaknesses in widely deployed systems. Incident response data shows that:

- 18% of breaches began with the exploitation of unpatched web-facing assets

- 12% leveraged exposed remote services

AI helps attackers correlate exposed infrastructure with known CVEs, prioritize high-value targets, and reduce the time between vulnerability disclosure and exploitation. For U.S. organizations managing large hybrid environments, that acceleration narrows defensive response windows.
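Defenders can run the same correlation in reverse to decide what to patch first. The following is a minimal sketch, not a description of any vendor's method: the `Finding` fields, the weighting factors in `priority`, and the asset names and CVE placeholders are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    asset: str
    cve_id: str            # placeholder identifiers, not real CVEs
    cvss: float            # base severity score, 0.0 to 10.0
    internet_facing: bool  # reachable from the public internet
    known_exploited: bool  # exploitation has been observed in the wild


def priority(finding: Finding) -> float:
    """Hypothetical score: severity, boosted by exposure and active exploitation."""
    score = finding.cvss
    if finding.internet_facing:
        score *= 1.5  # assumed weight for exposed assets
    if finding.known_exploited:
        score *= 2.0  # assumed weight for actively exploited flaws
    return score


def patch_queue(findings: list[Finding]) -> list[Finding]:
    """Return findings ordered from most to least urgent."""
    return sorted(findings, key=priority, reverse=True)


findings = [
    Finding("intranet-wiki", "CVE-XXXX-0001", 9.8, False, False),
    Finding("vpn-gateway", "CVE-XXXX-0002", 7.5, True, True),
    Finding("public-web", "CVE-XXXX-0003", 8.1, True, False),
]
queue = patch_queue(findings)
for finding in queue:
    print(f"{priority(finding):6.2f}  {finding.asset}  {finding.cve_id}")
```

In this toy data the exposed, actively exploited VPN gateway outranks the internal wiki even though the wiki has the higher raw severity, which reflects the point above: exposure and exploitability, not severity alone, determine how short the response window is.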

Can AI Automatically Generate Malware and Ransomware Variants?

AI does not eliminate human operators, but it reduces development effort and increases adaptability. The Microsoft report describes adversaries experimenting with AI-powered agents capable of rewriting payloads dynamically to evade detection systems.

AI-assisted malware development can support:

- Code rewriting to bypass signature-based detection

- Rapid generation of script-based loaders

- Obfuscation adjustments to evade endpoint monitoring

- Variant creation within Ransomware-as-a-Service ecosystems

This results in more frequent variant refresh cycles, making static detection increasingly unreliable.
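To see why exact-match signatures struggle with frequent rewrites, consider this small sketch using two harmless scripts. It assumes a hash-based signature (SHA-256 of the file contents) and compares it with a rough token-overlap measure. The helper names `sha256_hex`, `token_shingles`, and `jaccard` are hypothetical, and the similarity measure is only an illustration, not a production detection technique.

```python
import hashlib
import re


def sha256_hex(text: str) -> str:
    """Exact-match fingerprint, as a static hash signature would use."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def token_shingles(text: str, size: int = 3) -> set[tuple[str, ...]]:
    """Overlapping runs of `size` tokens; whitespace differences are ignored."""
    tokens = re.findall(r"[A-Za-z_]\w*|\d+|\S", text)
    return {tuple(tokens[i:i + size]) for i in range(len(tokens) - size + 1)}


def jaccard(a: set, b: set) -> float:
    """Share of shingles the two sets have in common (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


# Two benign scripts with identical behavior; the second adds a blank
# line and a comment, which is enough to change every bit of the hash.
original = "total = 0\nfor item in items:\n    total += item.price\nprint(total)\n"
variant = "total = 0\n\nfor item in items:\n    total += item.price  # sum\nprint(total)\n"

hashes_match = sha256_hex(original) == sha256_hex(variant)
similarity = jaccard(token_shingles(original), token_shingles(variant))

print("hashes match:", hashes_match)
print("token similarity:", round(similarity, 2))
```

The hashes no longer match after a cosmetic edit, while the token-level comparison still sees the two scripts as largely the same. Real rewrites go much further than this, which is why detection increasingly leans on behavior rather than file contents.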

How Are Criminal Groups Using AI to Scale Social Engineering?

Social engineering remains a dominant access vector. Recent investigations show that 28% of breaches began with phishing or related social engineering methods. AI amplifies this model by increasing scale and realism.

Threat groups are combining AI with multi-stage attack chains that may include:

- Email bombing to create urgency

- Voice phishing calls impersonating IT support

- Platform impersonation across collaboration tools…

For U.S. organizations, the concern is not only higher attack volume but improved precision. AI allows criminal groups to produce convincing, highly targeted content on demand, lowering the cost of deception while increasing its effectiveness.

Can Security Teams Use AI to Counter AI-Powered Threats?

Yes, but success depends on how AI is operationalized, not simply adopted.

Threat actors are using AI to automate reconnaissance, scale phishing campaigns, and refine malware development. Security teams can apply similar techniques to strengthen detection and reduce response time. When properly integrated, AI helps defenders shift from reactive monitoring to proactive risk identification.

In practical terms, AI can support:

- Behavioral detection across identity, endpoint, and cloud environments

- Identification of anomalous login patterns and impossible travel events

- Automated phishing analysis that evaluates context, tone, and metadata

- Rapid enrichment of threat indicators across multiple telemetry sources

- Intelligent prioritization of vulnerabilities based on exposure and exploitability
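As one concrete illustration of the list above, an impossible travel check can be sketched in a few lines. This is a simplified example under stated assumptions: the `Login` record, the 900 km/h speed ceiling, and the 50 km tolerance for geolocation noise are hypothetical choices, timestamps are assumed to share one time zone, and production systems also have to account for VPNs, proxies, and imprecise IP geolocation.

```python
import math
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Login:
    user: str
    when: datetime  # assumed to be in a single, consistent time zone
    lat: float
    lon: float


MAX_SPEED_KMH = 900.0   # assumed ceiling, roughly a commercial flight
MIN_DISTANCE_KM = 50.0  # assumed tolerance for geolocation noise


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points on a spherical Earth."""
    radius_km = 6371.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * radius_km * math.asin(math.sqrt(a))


def impossible_travel(prev: Login, curr: Login) -> bool:
    """Flag a login pair whose implied travel speed exceeds the ceiling."""
    distance = haversine_km(prev.lat, prev.lon, curr.lat, curr.lon)
    if distance < MIN_DISTANCE_KM:
        return False  # close enough to be the same place
    hours = (curr.when - prev.when).total_seconds() / 3600
    if hours <= 0:
        return True   # far apart at the same moment
    return distance / hours > MAX_SPEED_KMH


new_york = Login("alice", datetime(2025, 6, 1, 9, 0), 40.7128, -74.0060)
london = Login("alice", datetime(2025, 6, 1, 10, 0), 51.5074, -0.1278)
boston = Login("alice", datetime(2025, 6, 1, 14, 0), 42.3601, -71.0589)

print(impossible_travel(new_york, london))  # one hour apart, across the Atlantic
print(impossible_travel(new_york, boston))  # five hours apart, about 300 km
```

A fixed threshold like this is only a starting point. The behavioral detection described above would typically combine such a signal with device, network, and session context before raising an alert.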

Where attackers use AI to generate deception, defenders can use it to detect subtle inconsistencies at scale. Where adversaries automate scanning, security teams can automate exposure discovery. The balance is no longer about who has AI. It is about who integrates it more effectively into daily operations.
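One kind of inconsistency that polished wording does not hide is mismatched message metadata. The sketch below uses Python's standard `email` package to flag two simple signals: a Reply-To domain that differs from the sender's domain, and failed authentication results. The function names, the sample message, and the substring matching on the Authentication-Results header are simplifying assumptions for illustration, not a complete phishing analyzer.

```python
from email import message_from_string
from email.utils import parseaddr


def domain_of(header_value) -> str:
    """Extract the lowercase domain from an address header, or '' if absent."""
    _, address = parseaddr(header_value or "")
    return address.rpartition("@")[2].lower()


def header_flags(raw_message: str) -> list[str]:
    """Return human-readable warnings about inconsistent message metadata."""
    msg = message_from_string(raw_message)
    flags = []

    from_domain = domain_of(msg.get("From"))
    reply_domain = domain_of(msg.get("Reply-To"))
    if reply_domain and reply_domain != from_domain:
        flags.append("reply-to domain differs from sender domain")

    # Simplified: a real parser would follow the header's full grammar.
    auth = " ".join(msg.get_all("Authentication-Results", [])).lower()
    for check in ("spf", "dkim", "dmarc"):
        if f"{check}=fail" in auth:
            flags.append(f"{check} failed")
    return flags


sample = (
    'From: "Finance Team" <finance@example.com>\n'
    "Reply-To: payments@example.net\n"
    "Authentication-Results: mx.example.com; spf=pass; dkim=fail; dmarc=fail\n"
    "Subject: Urgent payment request\n"
    "\n"
    "Please process the attached invoice today.\n"
)

for flag in header_flags(sample):
    print(flag)
```

Checks like these are cheap to run on every message, so they can serve as inputs to the context and tone analysis mentioned earlier rather than as a verdict on their own.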

However, AI does not replace fundamentals. Strong identity controls, enforced multifactor authentication, network segmentation, asset visibility, and disciplined patch management remain foundational. AI strengthens these controls, but it cannot compensate for gaps in basic cyber hygiene.

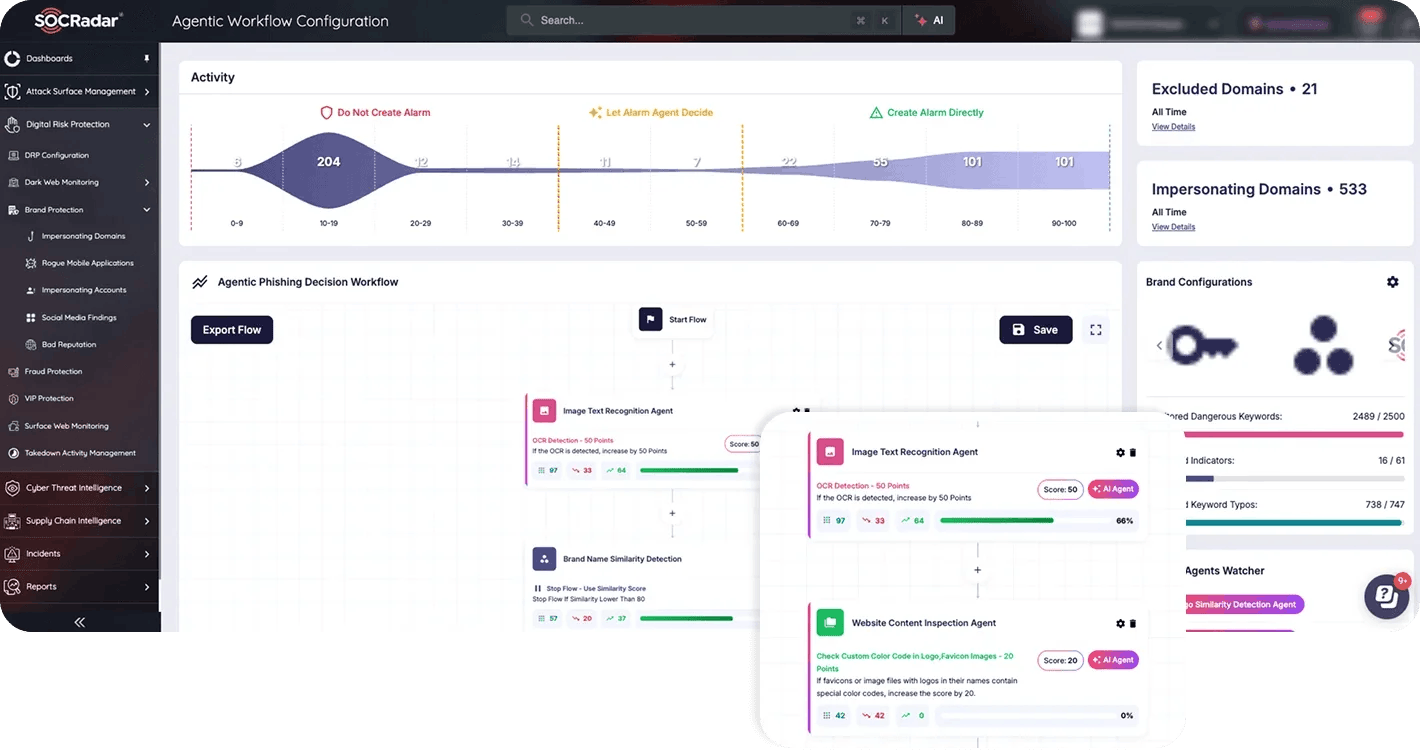

Redefine Your Cyber Defense with SOCRadar Agentic Threat Intelligence

AI-powered threats require intelligence that operates at machine speed. SOCRadar Agentic Threat Intelligence moves beyond passive feeds by deploying AI agents that analyze context, triage alerts, and trigger action automatically.

SOCRadar Agentic Threat Intelligence module

With Agentic TI, security teams can:

- Automate investigation and response at scale

- Reduce alert fatigue and false positives

- Deploy specialized agents for phishing, brand abuse, credential leaks, and more

- Turn threat intelligence into real-time operational outcomes

As attackers automate their operations, defenders must do the same. Agentic Threat Intelligence enables security teams to shift from reactive monitoring to autonomous, AI-driven defense.

How Will AI Reshape the U.S. Cyber Threat Landscape in the Coming Years?

AI is likely to reshape cyber threats through acceleration rather than reinvention.

Most successful attacks still rely on familiar techniques such as phishing, credential abuse, vulnerability exploitation, and lateral movement. What AI changes is the scale and speed at which these techniques can be deployed. Campaign preparation cycles shrink. Content becomes more convincing. Target selection becomes more precise.

In the coming years, U.S. organizations can expect:

- More personalized social engineering driven by data aggregation and generative models

- Faster adaptation of malware variants to evade detection controls

- Increased targeting of identity systems and cloud environments

- Automated reconnaissance of publicly exposed infrastructure

- Greater blending of cyber intrusion and influence operations

As a global technology leader and a primary geopolitical target, the United States will likely remain a focal point for AI-enabled experimentation by both criminal groups and state-aligned actors. AI lowers technical barriers, meaning smaller groups can operate with capabilities that previously required larger teams.

The long-term impact will not be a sudden transformation of cyber tactics, but a steady normalization of AI-assisted operations. Organizations that prioritize visibility, identity resilience, automation in detection, and continuous exposure monitoring will be better positioned to operate in this faster, more adaptive threat environment.

While this post focused on how artificial intelligence is accelerating cyber operations, automation does not operate in a vacuum. Many of the same identity abuse, telecom targeting, supplier pivoting, and recruitment-based lures discussed here are already visible in state-linked campaigns targeting the United States.

For a detailed breakdown of the nation-state clusters behind those operations, see our companion analysis: “Top Nation-State Cyber Threats Targeting the United States.” Together, these perspectives explain both the technology shift and the actors applying it across U.S. infrastructure, defense, and critical sectors.

Article Link: https://socradar.io/blog/ai-driven-threats-targeting-u-s-organizations/
